Getting Started with PCI DSS Compliance

Posted by: Kyle Dimitt

If your business processes credit card payments, you are likely required to comply with the Payment Card Industry Data Security Standard (PCI DSS). Navigating the questions around PCI DSS compliance and what you need to…

April 16, 2024

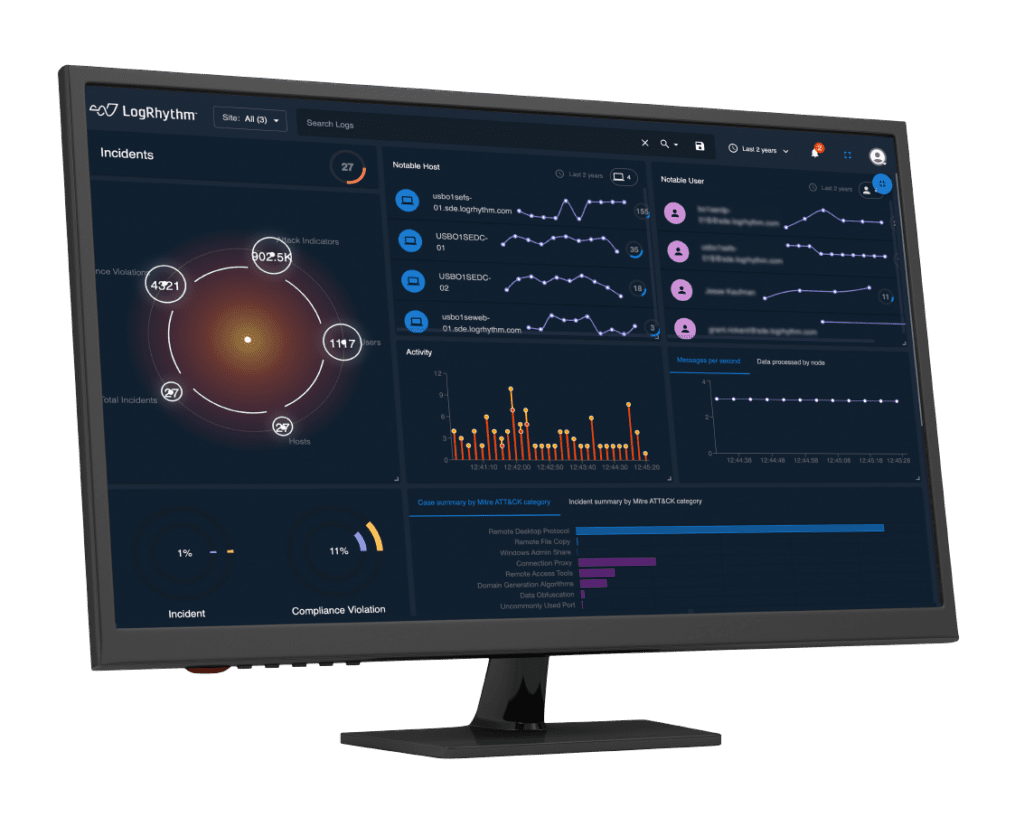

Secure a Faster Time to Value With LogRhythm Axon

Posted by: Jeff Week

LogRhythm Axon was built from the ground up so that security teams can focus on the actual job of cybersecurity. With LogRhythm Axon, security teams can immediately realize the value of the platform as they do not have to focus on…

April 1, 2024

Cut Dashboard Noise and Easily Retire Log Sources with LogRhythm 7.16

Posted by: Jake Haldeman

At LogRhythm, we’re focused on making your security journey easier with feature releases every 90 days for our self-hosted security information and event management (SIEM) platform, LogRhythm SIEM. As part of our latest quarterly release, we’re introducing a new feature…

April 1, 2024

Q1 2024 Success Services Use Cases

Posted by: Scott Chambers

As part of the Subscription Services team, LogRhythm consultants work with customers to help bolster their defenses against cyberthreats and to improve the effectiveness of their security operations. While working on certain use cases this quarter, we surfaced that Living…

March 29, 2024

4 PCI DSS Compliance Questions Every Security Analyst Should Ask

Posted by: Kyle Dimitt

If you take payment for a good or service of any kind, you are likely required to comply with the Payment Card Industry Data Security Standard (PCI DSS) requirements. This comprehensive security framework and compilation of best practices…

March 21, 2024

Key Components of a Robust Cloud Security Maturity Strategy

A cloud security maturity strategy is dynamic and evolves over time to address new threats, technologies, and business requirements. It involves a holistic and proactive approach to security, emphasizing continuous improvement and adaptability in the ever-changing landscape of cloud computing.…

March 18, 2024